Deepfakes & Fraud Prevention in Biometrics

When it comes to biometrics and their relevance to the internet and our online presence, identity fraud is an important issue. To date, most people do not have a clear understanding of the potential risks in today’s technologically advanced world. For that very reason, it is essential to explain one of the terms associated with fraud: deepfakes. This phenomenon is an example of a potential threat to people’s digital identities.

What are Deepfakes?

Deepfakes are fabricated videos, images and occasionally even audio clips, generated with artificial intelligence techniques that alter the appearance, actions or voice of individuals in various ways. As a result, the line between reality and fake is often blurred, leaving plenty of room for manipulation and fraud. Used with the intent to deceive and defame their victims, deepfakes are turning into dangerous cyber weapons. These victims include not only celebrities and politicians but, increasingly, private individuals.

With technology and software evolving constantly, the creation of deepfakes has reached an all-time high. Not only is the quality of forged videos and photos better than ever before, it has also become quite easy to create deepfakes with only limited image material and little knowledge of the underlying technology.

The deepfake video shown above, for instance, was created by one of our team members with a free online tool, using only a single image. Would you have been able to spot it as a deepfake straight away? No? No worries! Later on, we’ll explain in more detail how deepfakes can be detected reliably.

The Meaning & History of Deepfakes

The term “deepfake” is a blend of the words “deep learning” and “fake”, highlighting the use of machine learning methods to create fakes almost autonomously. Because of their potentially destructive content, there have recently been efforts in business and politics to bring about change. The goal is to limit the use of software that generates such fake content and to punish any unauthorized creation of “face swapping” or “body puppetry”. Regulators are driven to establish new laws not only by the need for data protection but also by the threat deepfakes pose to legal and democratic processes beyond the online world. In fact, deepfakes are used in many areas of life: politics, the arts and especially pornography, where perpetrators misrepresent people by putting their faces, voices or bodies on display in order to commit crimes.

Did you know that 96 % of deepfakes are found in the porn industry, making it by far the field where they are most prevalent?

Creating (Celebrity) Deepfakes

The first deepfakes appeared online in 2017 on Reddit, when a user superimposed celebrities’ faces onto the actors in adult videos. These deepfakes went viral, along with the shared computer code used to produce such manipulated videos and images. This led to an explosion of fake content across social media, with celebrities as the initial targets. One of the most famous deepfakes is a 2018 video produced by BuzzFeed with Jordan Peele, in which Barack Obama seemingly calls his successor Donald Trump “a total and complete dipshit”.

In general, detecting a deepfake is a remarkably complex challenge, and people without in-depth knowledge of the subject can rarely spot one with the naked eye. We therefore have to turn to alternative methods, and biometrics can be a useful starting point. You can test your own ability to identify deepfakes with the following deepfake video featuring our VP of Business Development and Marketing, Ann-Kathrin Freiberg. Pay close attention to details such as her hair, her glasses and the transition from her face to her neck.

Deepfake Detection through Deep Learning

When it comes to professional deepfakes, both the human eye and biometric systems face considerable challenges in detecting them. To research this problem, BioID is participating in a BMBF-funded consortium that includes several universities as well as the German Bundesdruckerei. With this project, the German BMBF (Federal Ministry of Education and Research) supports research into countermeasures against video manipulation and misuse. The goal is to develop deepfake detection methods that determine the authenticity of photo and video material. The resulting methods should produce trust levels that allow their decisions to be used in court.

You can find more information about our project “FAKE-ID” in the press release.

Traditional approaches using handcrafted features will be developed and analyzed, but the most promising techniques involve deep learning. Since deepfakes are generated by artificial intelligence, it seems that their detection needs to rely on the same methods.

Biometric Fraud Prevention

As more and more processes move online, driven by digitization and COVID-19, identity credentials need to be derived through trusted processes. Unsupervised identity verification opens the door to fraud and therefore needs to incorporate sophisticated anti-spoofing. For both face-to-face, agent-based video verification and fully automated identity verification, deepfakes are becoming a growing challenge. This raises the question of what can be done against deepfakes used for fraud or identity theft.

Biometric identity proofing with liveness detection is one way to defend against such attacks, and it is increasingly embraced by organizations that strive to secure their customers’ data. BioID provides biometric security mechanisms as software that detects identity fraud attempts. When secure applications block virtual cameras and altered video streams as inputs, manipulated photos and videos such as deepfakes can be exposed through liveness detection and facial recognition.

Liveness Detection for Fraud Prevention

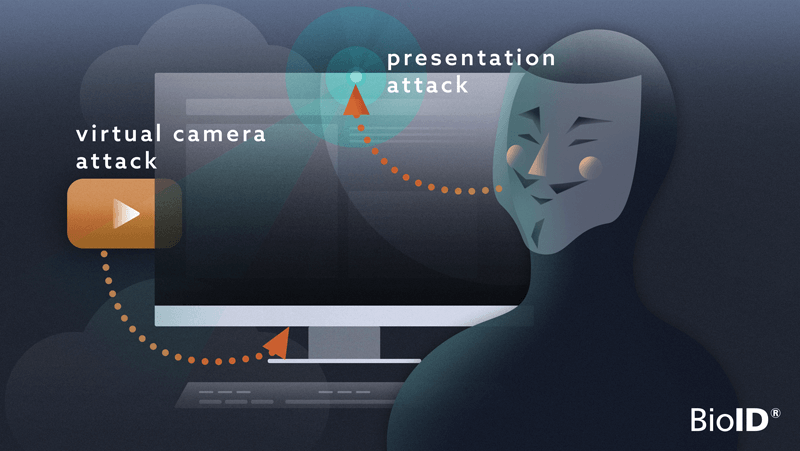

Presentation Attacks

Face liveness detection is an anti-spoofing method for facial biometrics. Its scientific name is presentation attack detection (PAD). The core function of a PAD mechanism is to determine whether a biometric feature was captured from a live person. State-of-the-art, ISO/IEC 30107-3 compliant liveness detection from BioID prevents biometric fraud using printed photos, cutouts, prints on cloth, 3D paper masks, videos on displays, video projections and more. Deepfakes presented at the sensor level (e.g. on displays) can be rejected with the same BioID methods, such as texture analysis and artificial intelligence.
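
To make the idea of a PAD decision a little more concrete, here is a minimal Python sketch. It assumes a hypothetical PAD model that outputs a score between 0 and 1 (higher meaning "more likely an attack") and simply compares that score with a threshold. It then computes the two error rates used in ISO/IEC 30107-3 testing: APCER, the share of attack presentations wrongly accepted as live (here per attack type, plus the worst case), and BPCER, the share of live presentations wrongly rejected. The scores, threshold and attack-type labels are illustrative assumptions, not BioID's implementation.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass
class Presentation:
    score: float            # hypothetical PAD score in [0, 1]; higher = more likely an attack
    species: Optional[str]  # attack type such as "printed_photo"; None for a live (bona fide) capture


def is_attack(score: float, threshold: float) -> bool:
    """Classify a single presentation: True means 'reject as attack'."""
    return score >= threshold


def pad_error_rates(samples, threshold):
    """Return (APCER per attack type, worst-case APCER, BPCER) at the given threshold."""
    attacks_by_species = defaultdict(list)
    bona_fide = []
    for s in samples:
        if s.species is None:
            bona_fide.append(s)
        else:
            attacks_by_species[s.species].append(s)

    # APCER: share of attack presentations of each type that were NOT detected
    apcer = {
        species: sum(not is_attack(s.score, threshold) for s in group) / len(group)
        for species, group in attacks_by_species.items()
    }
    # BPCER: share of live presentations that were wrongly rejected as attacks
    bpcer = sum(is_attack(s.score, threshold) for s in bona_fide) / len(bona_fide)
    return apcer, max(apcer.values()), bpcer


if __name__ == "__main__":
    samples = [
        Presentation(0.10, None),
        Presentation(0.60, None),
        Presentation(0.90, "printed_photo"),
        Presentation(0.40, "printed_photo"),
        Presentation(0.80, "display_video"),
    ]
    print(pad_error_rates(samples, threshold=0.5))
    # prints ({'printed_photo': 0.5, 'display_video': 0.0}, 0.5, 0.5)
```

Moving the threshold trades one error for the other: a higher threshold rejects fewer live users (lower BPCER) but lets more attacks through (higher APCER).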

Application Level Attacks

To fight attacks at the application level, e.g. through the injection of modified camera streams, challenge-response mechanisms can be used to reject prerecorded videos and deepfakes. In the future, even non-professionals will be able to modify facial features in real time, projecting a deepfake face onto a live, moving face. As a result, secure applications that prevent attacks through virtual cameras will become even more important.
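
The idea behind a challenge-response check can be sketched in a few lines of Python. The server issues a random, short-lived sequence of actions that a prerecorded video or deepfake cannot anticipate, and accepts the session only if the observed actions match in time. The action names, the 10-second time-to-live and the `observed_actions` input (which would come from a face-analysis step not shown here) are hypothetical assumptions for illustration, not a description of any specific product.

```python
import secrets
import time
from dataclasses import dataclass

# Hypothetical actions a user could be asked to perform in front of the camera
ACTIONS = ["turn_head_left", "turn_head_right", "blink", "smile", "nod"]


@dataclass
class Challenge:
    nonce: str
    actions: list
    issued_at: float
    ttl_seconds: float = 10.0
    used: bool = False


def issue_challenge(n_actions: int = 3, ttl_seconds: float = 10.0) -> Challenge:
    # secrets draws from the OS's cryptographically secure RNG,
    # so the sequence cannot be predicted and prerecorded in advance
    actions = [secrets.choice(ACTIONS) for _ in range(n_actions)]
    return Challenge(
        nonce=secrets.token_hex(16),
        actions=actions,
        issued_at=time.monotonic(),
        ttl_seconds=ttl_seconds,
    )


def verify_response(challenge, nonce, observed_actions, now=None) -> bool:
    # Each challenge may be answered only once, which blocks replaying a captured response
    if challenge.used:
        return False
    challenge.used = True

    now = time.monotonic() if now is None else now
    if not secrets.compare_digest(nonce, challenge.nonce):
        return False
    if now - challenge.issued_at > challenge.ttl_seconds:
        return False  # response arrived too late; a short window limits time to produce a matching fake
    return observed_actions == challenge.actions
```

A sufficiently fast real-time deepfake could still follow such instructions live, which is why challenge-response works best together with a secure application that blocks injected camera streams.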

Fortunately, BioID Liveness Detection prevents presentation attacks using deepfakes with the same level of assurance as attacks using any other video, animated avatar and the like. The German company’s anti-spoofing is used worldwide by digital identity providers to secure AML/KYC compliant processes. Multiple independent biometric testing laboratories have confirmed the technology’s ISO/IEC 30107-3 compliance, and it has even become part of a customer’s FIDO-certified solution. BioID Liveness Detection can be tested and evaluated at the BioID Playground.

Combined with a secure app that prevents any virtual camera access and blacklists the most common virtual cameras, this can stop criminals from harming individuals’ identities.
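
A very simple form of the virtual camera blacklisting mentioned above might look like the following Python sketch. It assumes the app can already list the names of the available camera devices (how this works depends on the platform and is not shown) and matches them against a small, illustrative list of well-known virtual camera products. Device names are easy to change, so a name check like this can only complement the other protections of a secure app.

```python
# Illustrative (not exhaustive) name fragments of common virtual camera products
VIRTUAL_CAMERA_MARKERS = ("obs virtual camera", "manycam", "snap camera", "xsplit vcam")


def is_virtual_camera(device_name: str) -> bool:
    """Return True if the device name matches a known virtual camera."""
    name = device_name.casefold()
    return any(marker in name for marker in VIRTUAL_CAMERA_MARKERS)


def allowed_cameras(device_names):
    """Keep only devices that do not look like virtual cameras."""
    return [name for name in device_names if not is_virtual_camera(name)]


# Example with hypothetical device names as an app might enumerate them
print(allowed_cameras(["ManyCam Virtual Webcam", "FaceTime HD Camera", "Snap Camera"]))
# prints ['FaceTime HD Camera']
```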

Positive Implications of Deepfakes

As already established, deepfakes can be deeply destructive when used for public defamation out of spite or revenge. However, the underlying technologies and algorithms are not only harmful; they can also be used for positive change. Visual effects, digital avatars and Snapchat filters are just a few examples of positive use cases. For society, the potential benefits go even further. In education, for instance, deepfakes could be used to create a more innovative and engaging learning environment. Famous figures like JFK have already been used in deepfakes to offer history lessons in schools. Other fields where deepfakes are of interest are art and the film industry. Here, they are used to create synthetic material that tells captivating stories, in some cases creating avatar-like experiences. David Beckham used deepfakes to broaden the reach of a globally relevant message: in a campaign to raise awareness of malaria, deepfake technology made him appear to speak multiple languages.

All in all, deepfakes have many dimensions, and while they can have a positive impact, the negative consequences for individuals, politics and society outweigh it. Because women are often the victims, deepfakes also have an important gender dimension. Protecting women and their reputations should therefore be the primary concern for politicians when passing new laws. The legal route for victims is tough, however, as perpetrators often act anonymously, which makes tracing deepfakes back to their origin almost impossible. For this reason, it is essential to tackle the problem at its root and try to prevent distribution in the first place. Platforms like Instagram, where much of this fake content spreads, could help by taking action.

Supporting biometrics and authentication algorithms is crucial in combating these online threats. In this way, measures against deepfakes and other damaging online defamation attacks can be developed, and trust in the digital world can be restored.

More information about our company as well as our products helping to prevent such criminal acts can be found here: https://www.bioid.com/deepfake-detection/

Contact us for more information.

Contact

Ann-Kathrin Freiberg

+49 911 9999 898 0

info@bioid.com